DetectGPT#

DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature by Mitchell et al. [2023]

The paper addresses the growing need for systems that can identify machine-generated text as large language models (LLMs) become increasingly fluent and knowledgeable. The authors observe that text sampled from an LLM tends to occupy negative-curvature regions of that model’s log probability function, and they build a curvature-based detection criterion, DetectGPT, on this observation. DetectGPT does not require training a separate classifier, collecting a dataset of real or generated passages, or watermarking generated text. It uses only log probabilities computed by the source model and random perturbations of the passage produced by another pre-trained language model. The authors report that this approach detects machine-generated fake news articles more effectively than existing zero-shot methods.

In summary:

Need for systems to detect machine-written text due to advanced LLMs

LLM-generated text found in negative curvature regions of log probability function

DetectGPT: new curvature-based criterion for identifying machine-generated text

No separate classifier, dataset, or watermarking needed

Uses log probabilities from the source model and random perturbations from another pre-trained language model

DetectGPT outperforms existing zero-shot methods in detecting fake news articles

Introduction#

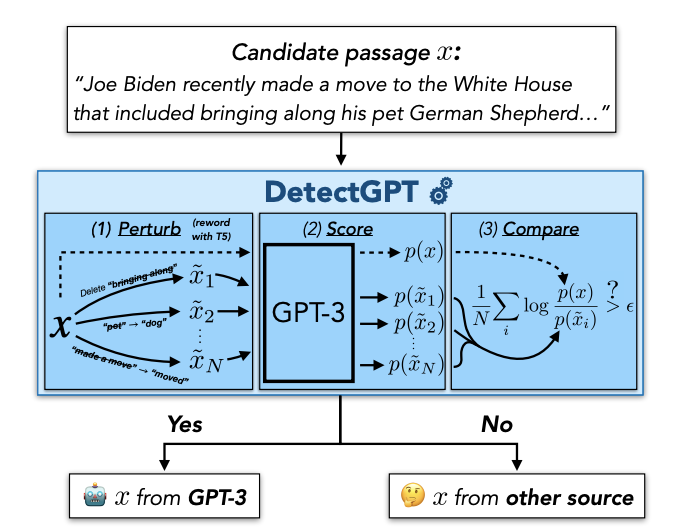

Fig. 132 An overview of the problem and DetectGPT#

LLMs generate fluent responses to user queries, sometimes replacing human labor

Issues with LLM use in student essays, journalism, and factual accuracy

Humans struggle to differentiate machine-generated and human-written text

Binary classification problem: detect if a passage is generated by a specific source model

Zero-shot machine-generated text detection using the source model without adaptation

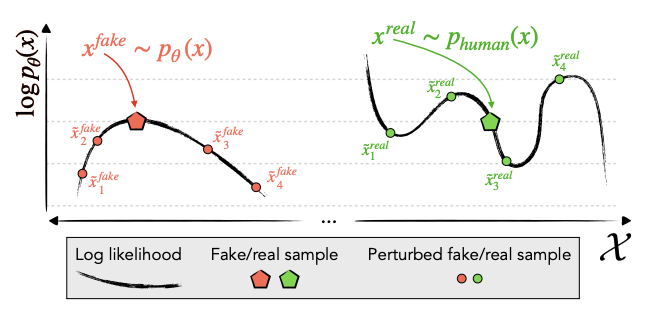

Fig. 133 An illustration of the underlying hypothesis#

Hypothesis: Model-generated text lies in areas with negative curvature in log probability function

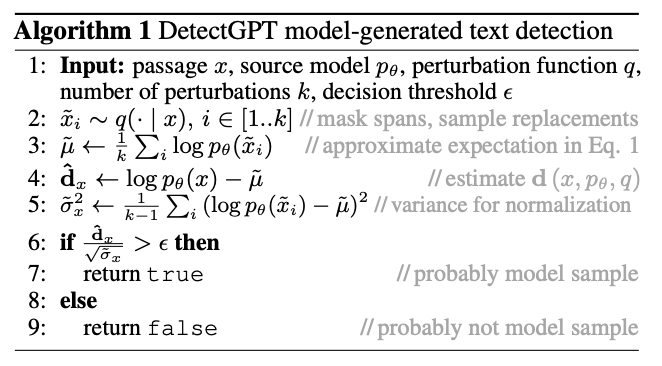

Fig. 134 Algorithm for DetectGPT#

DetectGPT: Zero-shot method for detecting machine-generated text

Compares log probability of original passage with perturbations under the source model

If the perturbed passages have a markedly lower average log probability than the original, the passage was likely generated by the source model

DetectGPT outperforms existing zero-shot methods in detecting machine-generated text
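
The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `log_prob` and `perturb` are hypothetical callables standing in for the source model's scoring function and the perturbation model, and the toy versions below, the threshold of `0.0`, and the default of 20 perturbations are all assumptions chosen only to make the example runnable.

```python
import random
from statistics import mean
from typing import Callable


def perturbation_discrepancy(
    passage: str,
    log_prob: Callable[[str], float],
    perturb: Callable[[str], str],
    num_perturbations: int = 20,
) -> float:
    """Log probability of the passage minus the mean log probability of its perturbations."""
    perturbed = [perturb(passage) for _ in range(num_perturbations)]
    return log_prob(passage) - mean(log_prob(p) for p in perturbed)


def likely_from_source_model(
    passage: str,
    log_prob: Callable[[str], float],
    perturb: Callable[[str], str],
    threshold: float = 0.0,
) -> bool:
    """Flag the passage when perturbing it lowers the log probability by more than `threshold`."""
    return perturbation_discrepancy(passage, log_prob, perturb) > threshold


# Toy stand-ins (assumptions for illustration only, not a real language model).
PREFERRED = {"the", "cat", "sat", "on", "mat"}


def toy_log_prob(text: str) -> float:
    # Pretend score: every word outside PREFERRED costs one unit of log probability.
    return -float(sum(word not in PREFERRED for word in text.split()))


def toy_perturb(text: str) -> str:
    # Replace one randomly chosen word with a token outside PREFERRED.
    words = text.split()
    words[random.randrange(len(words))] = "xyzzy"
    return " ".join(words)


print(likely_from_source_model("the cat sat on the mat", toy_log_prob, toy_perturb))
print(likely_from_source_model("quokkas juggle xylophones", toy_log_prob, toy_perturb))
```

In this toy setup the first passage sits at a peak of the pretend scoring function, so every perturbation lowers its score and it is flagged. The second passage scores equally badly before and after perturbation, so it is not flagged. In practice the threshold would need to be chosen for the model and data at hand.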

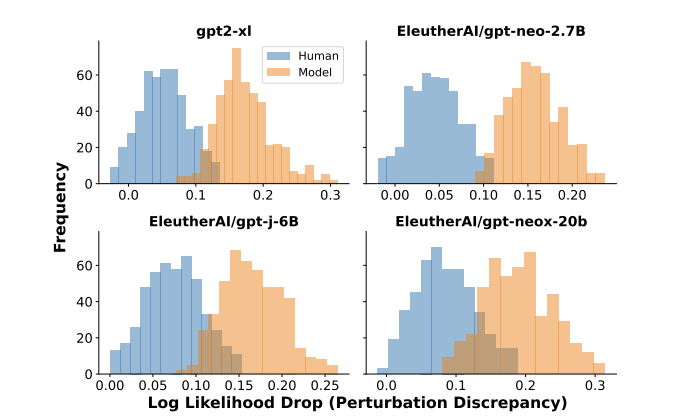

Fig. 135 Empirical evaluation of the hypothesis.#

Contributions:

Identification and validation of negative curvature hypothesis

Development of DetectGPT, an algorithm that approximates the trace of the log probability function’s Hessian to detect model samples

The Zero-Shot Machine-Generated Text Detection Problem#

Focus on zero-shot machine-generated text detection

Detect if a text is a sample from a source model without using human-written or generated samples

‘White box’ setting allows evaluating the log probability of a sample

Doesn’t assume access to model architecture or parameters

DetectGPT uses generic pre-trained mask-filling models

Generates ‘nearby’ passages to the candidate passage

No fine-tuning or adaptation to the target domain required
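
One way to picture how ‘nearby’ passages might be produced is a mask-and-fill loop: hide a few short spans of words and let a filler propose replacements. The sketch below is only an illustration under stated assumptions. The `fill` callable is a hypothetical stand-in for a pre-trained mask-filling model, and the default `mask_fraction` and `span_length` values are illustrative choices rather than settings taken from the text above.

```python
import random
from typing import Callable, List, Optional

# A filler receives the left context, the masked span, and the right context,
# and returns replacement words for the span (hypothetical interface).
Filler = Callable[[List[str], List[str], List[str]], List[str]]


def mask_and_fill(
    passage: str,
    fill: Filler,
    mask_fraction: float = 0.15,
    span_length: int = 2,
    rng: Optional[random.Random] = None,
) -> str:
    """Replace a few short word spans of `passage` with the filler's proposals."""
    rng = rng or random.Random()
    words = passage.split()
    # Number of spans needed to cover roughly `mask_fraction` of the words.
    num_spans = max(1, round(mask_fraction * len(words) / span_length))
    for _ in range(num_spans):
        start = rng.randrange(max(1, len(words) - span_length + 1))
        end = start + span_length
        words[start:end] = fill(words[:start], words[start:end], words[end:])
    return " ".join(words)


# Toy filler (assumption): swap in a synonym when one is known, else keep the word.
SYNONYMS = {"quick": "fast", "lazy": "idle", "jumps": "leaps"}


def toy_fill(left: List[str], span: List[str], right: List[str]) -> List[str]:
    return [SYNONYMS.get(word, word) for word in span]


print(mask_and_fill("the quick brown fox jumps over the lazy dog", toy_fill))
```

A real mask-filling model would condition on the surrounding context, which is why the filler interface receives the left and right context even though the toy filler ignores them.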

DetectGPT: Zero-shot Machine-Generated Text Detection with Random Perturbations#

DetectGPT hypothesis: machine-generated samples lie in areas of negative curvature of log probability function, unlike human text

Small perturbations to a machine-generated passage should lower its log probability by a relatively large amount on average, compared with the same kind of perturbations applied to human-written text

Perturbation function \(q(\cdot | x)\) produces slightly modified versions of passage \(x\) with similar meaning

Perturbation discrepancy \(d(x, p_{\theta}, q)\) defined as the difference between log probability of original passage and the average log probability of perturbed passages
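
Written out in the notation above, with \(\tilde{x}\) denoting a perturbed passage drawn from \(q(\cdot | x)\), the verbal definition corresponds to:

\[
d(x, p_{\theta}, q) = \log p_{\theta}(x) - \mathbb{E}_{\tilde{x} \sim q(\cdot | x)} \log p_{\theta}(\tilde{x})
\]

Since the expectation cannot be computed exactly, a natural estimate (assuming \(k\) independent perturbations \(\tilde{x}_1, \dots, \tilde{x}_k\), where \(k\) is a symbol introduced here for illustration) replaces it with a sample mean:

\[
\hat{d}(x, p_{\theta}, q) = \log p_{\theta}(x) - \frac{1}{k} \sum_{i=1}^{k} \log p_{\theta}(\tilde{x}_i), \qquad \tilde{x}_i \sim q(\cdot | x)
\]

Under the hypothesis, this quantity should tend to be larger for passages generated by \(p_{\theta}\) than for human-written text.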