Topic Modeling#

Introduction#

As the digital age continues to expand, the volume of unstructured text data grows exponentially. From social media posts and news articles to research papers and customer reviews, there is a wealth of information waiting to be extracted and analyzed. Topic modeling emerges as a powerful tool to summarize and make sense of this large-scale unstructured text data, enabling users to better understand, navigate, and utilize the available information.

At its core, topic modeling is an unsupervised machine learning technique that employs the words within a document to infer its underlying subject. By identifying patterns of word co-occurrence and frequencies, topic models can reveal the latent structure or “topics” that characterize a collection of documents. This process not only allows for a high-level summary of the document’s content but also serves as a valuable method for dimension reduction.

Dimension reduction is crucial for simplifying complex data and enhancing the efficiency of subsequent analysis. In the context of text data, topic modeling excels at reducing the dimensionality of the document-word matrix by representing documents as mixtures of topics and topics as distributions over words. This condensed representation not only captures the essence of the document’s content but is also more interpretable compared to other dimension reduction techniques.

While other methods, such as Principal Component Analysis (PCA), are also used for dimension reduction, topic models offer a more interpretable and contextually meaningful output. PCA transforms the original data into orthogonal components that explain the maximum variance, which can be difficult to relate back to the original features. In contrast, topic models generate a set of human-understandable topics that provide a clear view of the document’s thematic structure.

In summary,

Summarize unstructured text: Topic modeling helps in making sense of large-scale unstructured text data, providing high-level summaries of documents’ content.

Infer subject using words: Analyzes patterns of word co-occurrence and frequencies to reveal latent topics characterizing a collection of documents.

Useful for dimension reduction: Represents documents as mixtures of topics and topics as distributions over words, simplifying complex data and enhancing analysis efficiency.

More interpretable than PCA: Generates human-understandable topics that provide a clear view of the document’s thematic structure, compared to PCA’s orthogonal components which can be difficult to relate back to original features.

Document Clustering#

Document clustering is a technique used in text mining and natural language processing to group documents based on their content or other features. This can help in organizing, summarizing, and extracting meaningful information from large collections of text. Some common applications include topic modeling, document classification, and information retrieval.

Cosine Similarity#

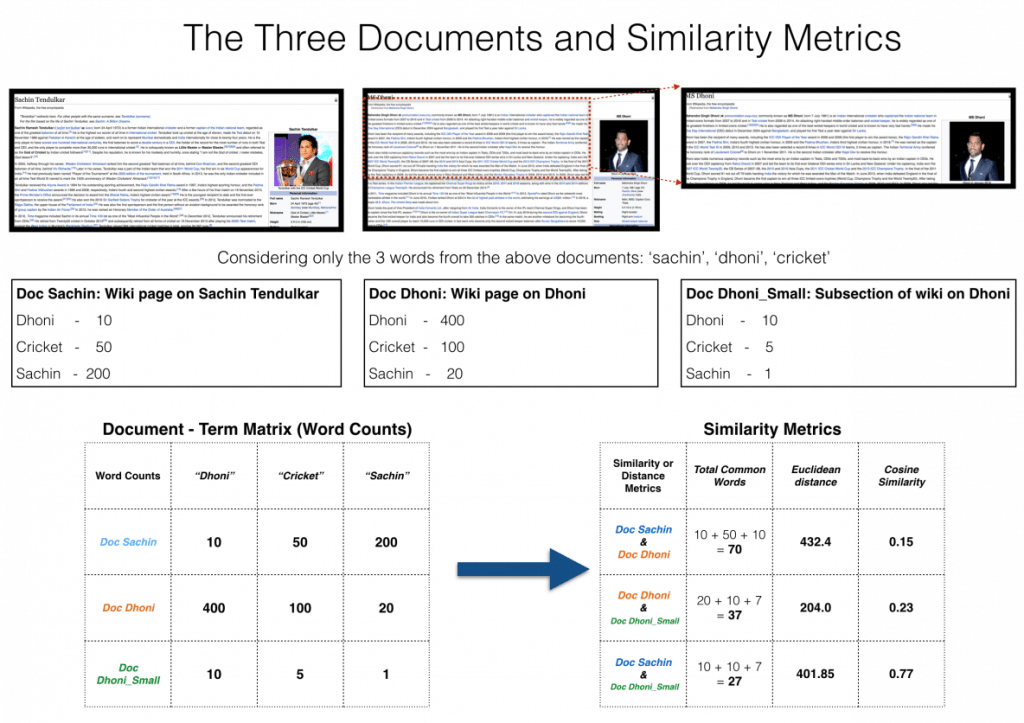

Cosine similarity is a popular similarity measure used in text mining and information retrieval. It measures the cosine of the angle between two non-zero vectors, with a range between -1 and 1. In the context of document clustering, each document is represented as a vector of term frequencies or other weights, and the cosine similarity quantifies the similarity between two documents.

The cosine similarity between two vectors A and B can be calculated as follows:

Fig. 39 Cosine Similarity Example#

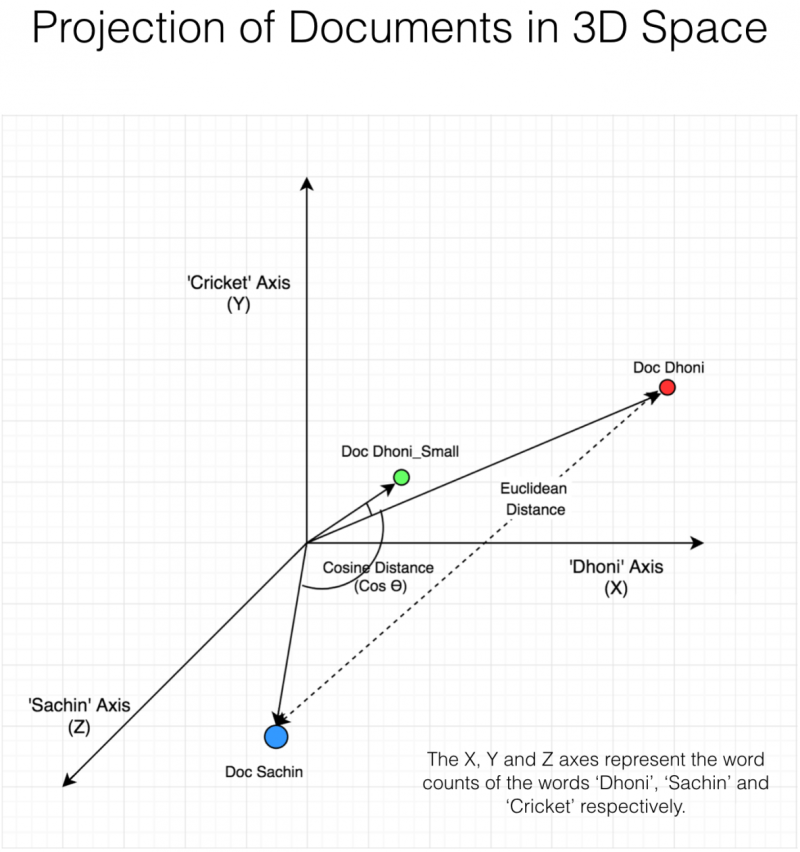

Fig. 40 Projecting Documents onto a 3D Space#

Dimensionality Reduction#

Before applying clustering algorithms to document collections, it is often beneficial to perform dimensionality reduction. High-dimensional data can be noisy and may result in poor clustering performance due to the curse of dimensionality. Dimensionality reduction techniques, such as PCA, SVD, or t-SNE, can help in reducing the number of dimensions while preserving the essential structure and relationships in the data. This can lead to more efficient and accurate clustering results.

Clustering Algorithms#

There are several clustering algorithms that can be applied to document clustering. Some popular ones include:

K-means: K-means is a widely-used partitioning clustering algorithm that aims to minimize the sum of squared distances between data points and their respective cluster centroids. K-means requires the number of clusters (K) to be specified beforehand and is sensitive to the initial placement of cluster centroids.

DBSCAN: Density-Based Spatial Clustering of Applications with Noise (DBSCAN) is a density-based clustering algorithm that identifies clusters based on the density of data points in a region. DBSCAN does not require the number of clusters to be specified beforehand and can handle noise and outliers effectively.

Agglomerative Hierarchical Clustering: This is a bottom-up approach to hierarchical clustering that starts with each data point as its own cluster and iteratively merges the closest pair of clusters until only one cluster remains. The result is a tree-like structure, called a dendrogram, that can be cut at different levels to obtain the desired number of clusters.

Latent Dirichlet Allocation (LDA): LDA is a generative probabilistic model for collections of discrete data, such as text corpora. It is particularly useful for topic modeling, where the goal is to discover the underlying topics in a document collection. LDA represents documents as mixtures of topics and topics as mixtures of words, and it learns these representations using a Dirichlet prior.

To perform document clustering, first preprocess the text data (e.g., tokenization, stemming, and removing stop words), convert the documents into a suitable vector representation (e.g., TF-IDF or word embeddings), and then apply the desired clustering algorithm to group similar documents together.