Decoding and Search Strategies#

In recent years, there has been a surge of interest in open-ended language generation due to the development of large language models (LLMs) like GPT-2, XLNet, OpenAI-GPT, CTRL, TransfoXL, XLM, BART, T5, GPT-3, and BLOOM. These models have shown promising results in various generation tasks such as open-ended dialogue, summarization, and story generation. Improved decoding methods have played a significant role in the success of these models.

Auto-regressive language generation assumes that the text being generated can be broken down into a sequence of subparts. Each part depends on the previous parts, allowing an auto-regressive decoder to generate text one token at a time based on its predecessors.

The probability of generating a word sequence \(w_{1:𝑇}\) given an initial context word sequence \(W_0\) can be expressed as:

Here, \(W_0\) is the initial context word sequence. The length 𝑇 of the word sequence is determined on-the-fly, which means it is decided as the sequence is generated. The sequence generation typically stops when an End-Of-Sequence (EOS) token is generated from the probability distribution \(𝑃(w_t|w_{1:t−1},W_0)\).

This auto-regressive approach allows LLMs to generate coherent and contextually relevant text based on the initial context 𝑊0. Different decoding strategies, such as greedy search, beam search, and sampling methods like top-k sampling, have been used to improve the generation quality and diversity.

For example, consider a language model generating a story based on the context “Once upon a time, in a small village”:

Greedy search would select the word with the highest probability at each step, potentially leading to repetitive and less diverse text.

Beam search would maintain a fixed number of partial sequences (the beam width) and extend them, selecting the most probable overall sequence. This can improve diversity but may still suffer from repetitiveness.

Top-k sampling would sample the next word from the top-k most probable words, increasing diversity in the generated text.

These strategies help LLMs generate meaningful and diverse text for various language generation tasks.

# If you run this notebook in Colab, set Hardware accelerator to GPU.

# Then, install transformers

%pip install -U transformers tensorflow

import tensorflow as tf

from transformers import TFGPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

# add the EOS token as PAD token to avoid warnings

model = TFGPT2LMHeadModel.from_pretrained("gpt2", pad_token_id=tokenizer.eos_token_id)

All model checkpoint layers were used when initializing TFGPT2LMHeadModel.

All the layers of TFGPT2LMHeadModel were initialized from the model checkpoint at gpt2.

If your task is similar to the task the model of the checkpoint was trained on, you can already use TFGPT2LMHeadModel for predictions without further training.

Greedy Search#

Greedy search is a decoding strategy used in language generation tasks. It works by selecting the word with the highest probability as the next word in the generated sequence, given the previous words.

In each step of the generation process, the model computes the probabilities of all possible words, given the context of the previously generated words. Greedy search then chooses the word with the highest probability and appends it to the output sequence. This process is repeated until a predefined stopping condition is met, such as reaching the maximum output length or generating an end-of-sentence (EOS) token.

While greedy search is computationally efficient and straightforward to implement, it has some drawbacks. The main limitation is that it can generate suboptimal output sequences since it doesn’t explore other possible word combinations. It always chooses the locally optimal word without considering the global context, which might lead to less coherent or less diverse generated text. Other search strategies, like beam search or nucleus sampling, can help overcome these limitations by exploring a larger space of possible output sequences.

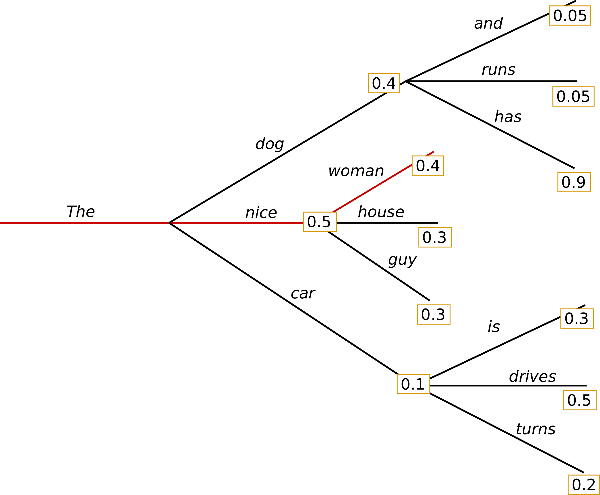

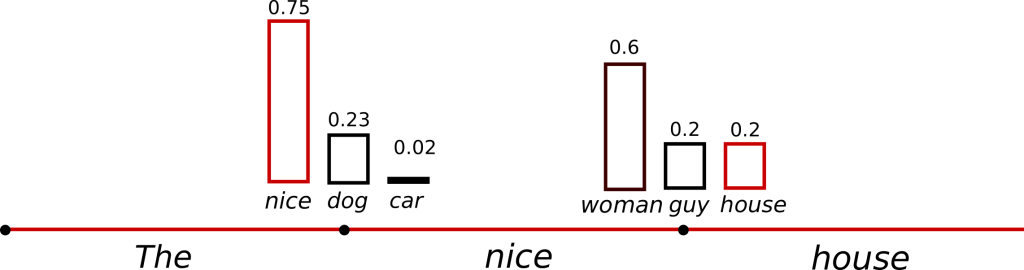

Fig. 90 Greedy Search Example#

The next word is chosen using the formula \(w_t = \operatorname{argmax}_{w}P(w | w_{1:t-1})\) at each timestep \(t\), where \(w_t\) is the next word and \(w_{1:t-1}\) are the previous words in the sequence.

For example, starting with the word “The”, the algorithm evaluates the probabilities of all possible next words and greedily selects the one with the highest probability, such as “nice”. The process is repeated to generate the subsequent words in the sequence. In this case, the final generated word sequence is (“The”, “nice”, “woman”). The overall probability of this sequence is calculated by multiplying the probabilities of each chosen word, which is \(0.5 \times 0.4 = 0.2\) in this example.

While greedy search is computationally efficient and easy to implement, it has some limitations. The algorithm always chooses the locally optimal word without considering the global context, which might lead to less coherent or less diverse generated text. Other search strategies, like beam search or nucleus sampling, can help overcome these limitations by exploring a larger space of possible output sequences.

# encode context the generation is conditioned on

input_ids = tokenizer.encode(

"I enjoy studying deep learning for natural language processing",

return_tensors="tf",

)

# generate text until the output length (which includes the context length) reaches 50

greedy_output = model.generate(input_ids, max_length=100)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(greedy_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, but I'm not sure how to apply it to real-world applications.

I'm not sure how to apply it to real-world applications. I'm not sure how to apply it to real-world applications. I'm not sure how to apply it to real-world applications. I'm not sure how to apply it to real-world applications. I'm not sure how to apply it to real-world applications. I'm not

Generating word sequences with GPT-2: To generate word sequences using GPT-2, you provide a context, such as (“I”, “enjoy”, “studying”, “deep”, “learning”, “for”, “natural”, “language”, “processing”), and the model predicts the most likely words to follow the given context.

Repetitive output: A common issue in language generation, especially when using greedy or beam search, is that the model often starts repeating itself. This occurs because these search strategies tend to get stuck in a loop of selecting locally optimal words without considering the broader context.

Drawbacks of greedy search:

Misses high probability words: Greedy search can miss high probability words that are hidden behind a low probability word. Since the algorithm always chooses the word with the highest probability at each step, it doesn’t explore other word combinations that could lead to better overall sequences.

Lack of diversity: Greedy search may generate less diverse and less coherent text, as it only focuses on the locally optimal choice. Other search strategies, like beam search or nucleus sampling, can help mitigate these issues by exploring a larger space of possible output sequences and considering global context.

Beam search#

Beam search is a decoding strategy used in language generation tasks to generate more diverse and coherent text compared to greedy search. It is a type of breadth-first search algorithm that maintains a fixed number of candidate sequences, called “beams,” at each step of the generation process.

The main idea behind beam search is to explore multiple word options at each step, rather than choosing only the word with the highest probability, as in greedy search. The algorithm starts by selecting the top k words with the highest probabilities, where k is the beam size. It then extends each of these words with the top k words, given the context. This results in k * k new candidate sequences. The algorithm keeps only the top k sequences with the highest overall probabilities and discards the rest.

The process is repeated at each timestep until a predefined stopping condition is met, such as reaching the maximum output length or generating an end-of-sentence (EOS) token. The candidate sequence with the highest overall probability is selected as the final output.

Beam search offers a balance between computational complexity and the quality of generated text. A larger beam size increases the chances of finding better output sequences but also increases the computational cost. On the other hand, a smaller beam size is more computationally efficient but may generate less diverse and less coherent text.

In summary, beam search is a decoding strategy that explores multiple word combinations during text generation, which can lead to more diverse and coherent output compared to greedy search. However, it comes at the cost of increased computational complexity, depending on the beam size.

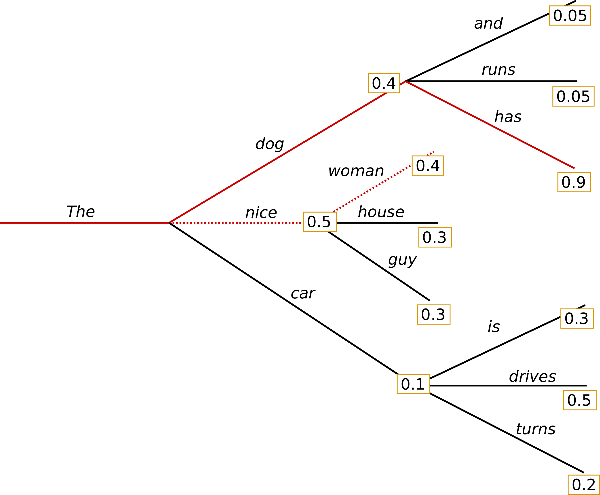

Fig. 91 Beam Search Example#

Beam search with

num_beams=2: Beam search is a decoding strategy that considers multiple hypotheses during text generation. In this example, we set the beam size to 2, meaning the algorithm will keep track of the two most likely word sequences at each timestep.At timestep 1: Instead of only considering the most likely hypothesis (“The”, “nice”), as in greedy search, beam search also maintains the second most likely hypothesis (“The”, “dog”).

At timestep 2: Beam search evaluates the probabilities of extending both hypotheses with the top two words. It finds that the word sequence (“The”, “dog”, “has”), with a probability of 0.36, is more likely than (“The”, “nice”, “woman”), which has a probability of 0.2.

Optimal solution found: In this toy example, beam search successfully discovers the most likely word sequence, which was missed by the greedy search.

Comparison to greedy search: Beam search generally finds output sequences with higher probabilities than greedy search. However, it’s not guaranteed to always find the most likely output, especially for large search spaces or small beam sizes. The quality of the generated text depends on the beam size, with larger beam sizes typically producing better results at the cost of increased computational complexity.

Set

num_beams > 1andearly_stopping=Trueso that generation is finished when all beam hypotheses reached the EOS token

# activate beam search and early_stopping

beam_output = model.generate(

input_ids,

max_length=100,

num_beams=5,

early_stopping=True,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, and I'm excited to see how it can be applied to real-world applications.

What is Deep Learning?

Deep learning is a type of artificial intelligence (AI) that can be applied to real-world applications. Deep learning is a type of artificial intelligence (AI) that can be applied to real-world applications.

Deep learning is a type of artificial intelligence (AI) that can be applied to real-world applications

While the result is arguably more fluent, the output still includes repetitions of the same word sequences.

A simple remedy is to introduce n-grams penalties as introduced by Paulus et al. (2017) and Klein et al. (2017).

The most common n-grams penalty makes sure that no n-gram appears twice by manually setting the probability of next words that could create an already seen n-gram to 0.

Setting

no_repeat_ngram_size=2to prevent 2-gram repetitions: By including this parameter in the generate function, we ensure that no 2-gram appears twice in the generated text.

# set no_repeat_ngram_size to 2

beam_output = model.generate(

input_ids,

max_length=100,

num_beams=5,

no_repeat_ngram_size=2,

early_stopping=True,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, and I'm excited to see how it can be applied to real-world applications.

In this post, I'll show you how you can use Deep Learning to build a neural network that can learn to read and write a sentence. In this article, you'll learn how to use the Deep Neural Network (DNN) to learn a language. You'll also learn about how the DNN works and what you need to do to get started

Improved output: The generated text no longer contains repeated 2-grams, which results in a more coherent output. However, it’s important to use n-gram penalties with caution, as they can prevent the repetition of important phrases. For example, a text about “New York” should not have a 2-gram penalty, as it would limit the number of times the city’s name appears.

Comparing and selecting the best beam: To compare the top beams after generation and choose the best one, set the

num_return_sequencesparameter to the desired number of highest-scoring beams to return. Ensure thatnum_return_sequences <= num_beams.

# set return_num_sequences > 1

beam_outputs = model.generate(

input_ids,

max_length=100,

num_beams=5,

no_repeat_ngram_size=2,

num_return_sequences=3,

early_stopping=True,

)

# now we have 3 output sequences

print("Output:\n" + 100 * "-")

for i, beam_output in enumerate(beam_outputs):

print("{}: {}".format(i, tokenizer.decode(beam_output, skip_special_tokens=True)))

Output:

----------------------------------------------------------------------------------------------------

0: I enjoy studying deep learning for natural language processing, and I'm excited to see how it can be applied to real-world applications.

In this post, I'll show you how you can use Deep Learning to build a neural network that can learn to read and write a sentence. In this article, you'll learn how to use the Deep Neural Network (DNN) to learn a language. You'll also learn about how the DNN works and what you need to do to get started

1: I enjoy studying deep learning for natural language processing, and I'm excited to see how it can be applied to real-world applications.

In this post, I'll show you how you can use Deep Learning to build a neural network that can learn to read and write a sentence. In this article, you'll learn how to use the Deep Neural Network (DNN) to learn a language. You'll also learn about how the DNN works and what you need to know about it to

2: I enjoy studying deep learning for natural language processing, and I'm excited to see how it can be applied to real-world applications.

In this post, I'll show you how you can use Deep Learning to build a neural network that can learn to read and write a sentence. In this article, you'll learn how to use the Deep Neural Network (DNN) to learn a language. You'll also learn about how the DNN works and what you need to know to get started

Analyzing the results: The output shows three different generated sequences, each being a top-scoring beam. The differences between these beams might be marginal, especially when using a small number of beams (e.g., 5). By examining these alternatives, you can select the one that best fits your requirements.

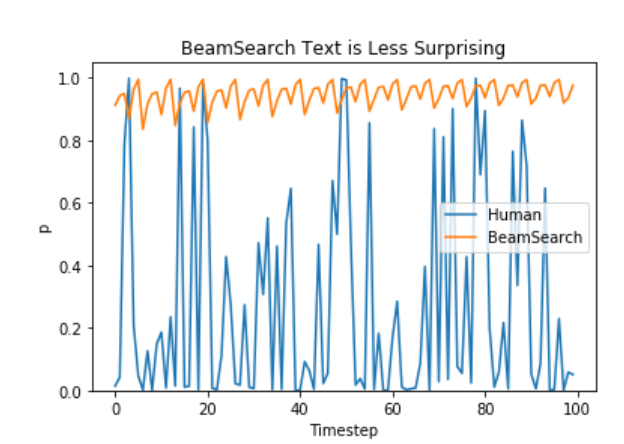

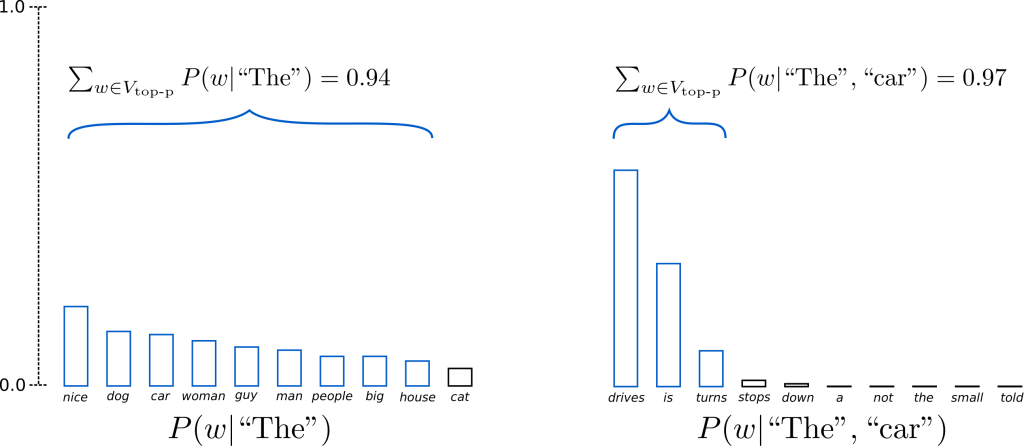

In open-ended text generation tasks, there are several reasons why beam search might not be the most suitable option:

Predictable length vs. varying length: Beam search tends to perform well in tasks where the desired length of the generated output is more or less predictable, such as machine translation or summarization. However, in open-ended generation tasks like dialog and story generation, the desired output length can vary significantly, making beam search less optimal.

Repetitive generation: Beam search often results in repetitive text generation. This issue can be particularly challenging to control in tasks like story generation, where applying n-gram or other penalties to prevent repetition may require extensive fine-tuning to strike the right balance between avoiding repetitive phrases and forcing unnatural “no-repetition” constraints.

Human-like language generation: High-quality human language typically contains a mix of both predictable and surprising elements. In other words, as humans, we appreciate generated text that is not too predictable or boring. According to a study by Ari Holtzman et al. (2019), beam search tends to favor high-probability words, which may lead to less engaging and less human-like text generation.

In summary, while beam search can be effective for certain text generation tasks, its limitations in handling varying output lengths, repetitiveness, and the need for more surprising and engaging text make it less suitable for open-ended generation tasks like dialog and story generation.

Fig. 92 Beam Search vs Human#

Sampling#

Sampling means randomly picking the next word \(w_t\) according to its conditional probability distribution:

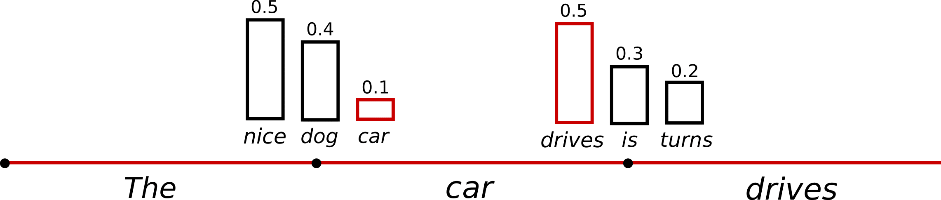

The following graphic visualizes language generation when sampling.

Fig. 93 Sampling Search#

In contrast to deterministic methods like greedy search, language generation using sampling introduces an element of randomness.

For example, given the starting word “The”, the next word “car” is sampled from the conditional probability distribution \(P(w | \text{"The"})\). Following this, the word “drives” is sampled from the distribution \(P(w | \text{"The"}, \text{"car"})\).

This stochastic approach results in a more diverse range of generated text compared to deterministic methods, as it allows for the exploration of various word combinations based on their conditional probabilities.

In the following code snippet, we set do_sample=True and deactivate Top-K sampling via top_k=0. By doing this, we enable sampling for generating text:

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# activate sampling and deactivate top_k by setting top_k sampling to 0

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=100,

top_k=0,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, especially to see how it does useful things over evolutionary time once you shift the ideology of theoretical language. I also really like developing algorithmically tailored introductory programming books to teach my students, but I simply don't have the spare time to do all that I did before. HALAX fight3r also released their original issue warning the public that Gaming with Game Software may be detrimental to your solidified web skills and appearances, likely to undermine your beliefs and help

While the generated text seems fine at first glance, a closer look reveals that it lacks coherence and contains words that do not sound natural. This incoherent gibberish is a common problem with sampling word sequences.

To address this issue, we can adjust the temperature of the softmax function. This modification sharpens the distribution \(P(w|w\_{1:t-1})\), increasing the likelihood of high probability words and decreasing the likelihood of low probability words. The following code snippet demonstrates how to apply a temperature of 0.7:

Fig. 94 Sampling Search with Temperature#

Note

Temperature is a hyperparameter used in language generation models to control the randomness and diversity of the generated text. It is applied to the softmax function, which converts logits (the raw model outputs) into probabilities. The temperature essentially adjusts the sharpness of the probability distribution over the possible next words in a sequence.

When the temperature is high (e.g., greater than 1), the model’s output probabilities become more uniform, meaning that the generated text will be more diverse and random, sometimes even incoherent. High temperature values encourage the model to explore less likely words and make it more creative.

On the other hand, when the temperature is low (e.g., less than 1), the probability distribution becomes more focused, and the model becomes more conservative. The generated text will be more coherent and predictable, with a higher probability of choosing the most likely words. However, when the temperature is too low (approaching 0), the generated text may become too repetitive and less interesting, resembling the output of greedy decoding.

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# use temperature to decrease the sensitivity to low probability candidates

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=100,

top_k=0,

temperature=0.7,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, but this is a simplistic example of how the language can be applied to a complex problem.

Using more complex architectures

The most common approach is to look at a whole set of ML designs that are quite complex and require a lot of work to deploy. For example, the first ML project I'm learning is the human language learning project. Let's take a look at the human-language learning project.

The current human-language

By applying a temperature of 0.7, the generated text becomes less random and more coherent, with fewer unnatural n-grams. However, it’s important to note that as the temperature approaches 0, the sampling process becomes similar to greedy decoding, which comes with its own set of issues, such as a lack of diversity in the generated text.

Top-K Sampling#

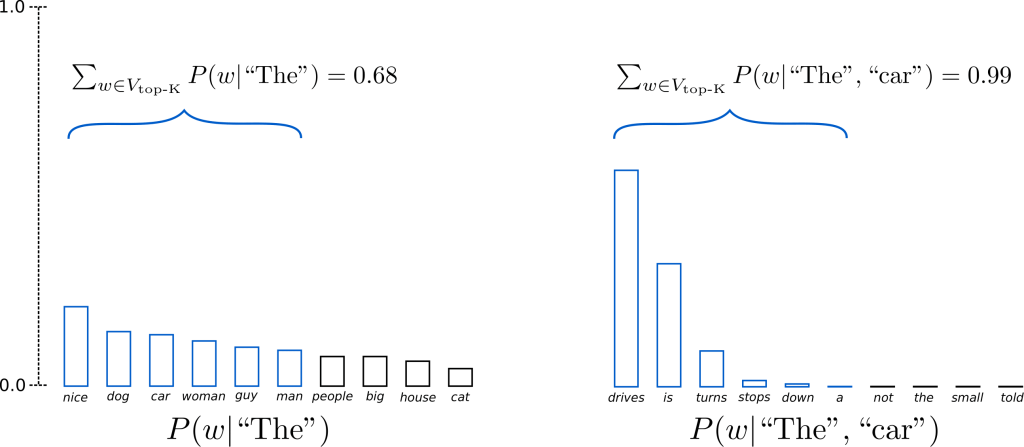

Fig. 95 Top-K Sampling#

Top-K sampling is a technique used in language generation models to make the generated text more coherent and meaningful. In Top-K sampling, only the \(K\) most likely next words are considered, and the probability mass is redistributed among these \(K\) next words.

Setting \(K=6\), for example, limits our sampling pool to the top 6 words in each step. This can eliminate unlikely candidates, making the generated text more human-like. However, one concern with Top-K sampling is that it doesn’t adapt dynamically to the number of words filtered from the next word probability distribution \(P(w|w_{1:t-1})\). This could lead to problems, as some words might be sampled from a very sharp distribution, while others from a much flatter distribution.

Let’s see how Top-K can be used in the library by setting top_k=50:

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# set top_k to 50

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=100,

top_k=50,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing, as well as in terms of learning from experience. As well as having my students who are learning an intermediate language and learn how to use it in a meaningful way or with a minimal effort, I can also focus on learning programming languages like C++ (though I wouldn't go so far as to call that "advanced"), Python (for those that aren't familiar with Python) or Java (I think I actually want to see what I can learn

The generated text appears to be more human-sounding than before. However, using a fixed K size can have drawbacks:

It could limit the model’s creativity for flatter probability distributions, as it might filter out reasonable candidates that could have led to more diverse and interesting text.

For sharper distributions, it could include ill-fitted words in the sample pool, endangering the model to produce gibberish.

To address these concerns, Ari Holtzman et al. (2019) introduced Top-p or nucleus sampling, which dynamically selects the \(K\) value based on a threshold probability \(p\), leading to a more adaptive approach.

Top-p (nucleus) sampling#

Fig. 96 Top-p Sampling#

Top-p sampling, also known as nucleus sampling, is a technique that chooses words from the smallest possible set whose cumulative probability exceeds a predefined threshold probability p. The probability mass is then redistributed among this set of words. This approach allows the size of the word set to dynamically increase and decrease according to the next word’s probability distribution.

In Top-p sampling, the model selects the minimum number of words needed to exceed the probability mass p. For example, with p=0.92, the model selects the most likely words whose cumulative probability is at least 92%. This method keeps a wide range of words when the next word is less predictable and narrows down the selection when the next word seems more predictable.

To activate Top-p sampling, set 0 < top_p < 1:

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# deactivate top_k sampling and sample only from 92% most likely words

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=100,

top_p=0.92,

top_k=0,

)

print("Output:\n" + 100 * "-")

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy studying deep learning for natural language processing. Learn more at lecture.lightlink.com.

Ciao — Social conscious "synchronization" between conscious and nonconscious participants at the Cognition Experience. Learn more at lecture.lightlink.com.

Everett's Processio

A video discussion

The Evolution of Mindfulness in Today's Economy

The Recent Advances in the Study of Mindfulness and Success in Society. The results from St Louis University

The generated text appears more human-like. In practice, both Top-p and Top-K sampling work well. You can also combine Top-p with Top-K sampling, which can prevent the selection of very low-ranked words while allowing for some dynamic word selection.

To get multiple independently sampled outputs, set the parameter num_return_sequences > 1:

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# set top_k = 50 and set top_p = 0.95 and num_return_sequences = 3

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=100,

top_k=50,

top_p=0.95,

num_return_sequences=3,

)

print("Output:\n" + 100 * "-")

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

Output:

----------------------------------------------------------------------------------------------------

0: I enjoy studying deep learning for natural language processing, as I've learned it quickly enough for me to understand it. The idea of modeling learning comes from both of these worlds. The first is that of natural language processing and the second is a mathematical theory that allows you to draw meaningful conclusions. To understand what is in the world of Natural Language Processing, go to the book by Mike Biederman of the University of Toronto or check out his website at www.layers.com. You are also

1: I enjoy studying deep learning for natural language processing. My favourite part, even though it is just about my main job, is learning to play a game. That is exactly what I am doing on my website. All this is part of my job as a developer and I love playing games.

My job with this company was to create a website where my students could explore the world as they would enjoy learning about computer science, physics, or any other science.

In doing so, I

2: I enjoy studying deep learning for natural language processing. In my experience it doesn't help my training to teach my students how to do it, but it's an awesome source for training and has led to many exciting discoveries about Deep Learning and neural nets in general.

Learning to code

Another way to learn Deep Learning is by coding. For example, I often code to solve problem A and then use it for the solving of problem B. This is where the language really comes in. I

The output consists of multiple independently sampled sequences, which can be further analyzed or used according to specific requirements.

Summary#

Various decoding or search strategies are used in open-ended language generation tasks. Here’s an overview of the most common strategies and their characteristics:

Greedy Search:

At each time step, Greedy Search selects the word with the highest predicted probability to follow the previous word.

One of the main issues with Greedy Search is that it may miss high-probability words at a certain time step if they are preceded by a low-probability word in the previous step.

This method often generates repetitive and predictable text, which may not resemble natural human language.

Beam Search:

Beam Search tracks the n-th (num_beams) most likely word sequences and ultimately outputs the most likely sequence.

While this method may seem promising, it struggles when the output length can be highly variable, as is the case with open-ended text generation.

Like Greedy Search, Beam Search can also produce repetitive and uninteresting text that doesn’t align with the way humans perform the same task.

Sampling With Top-k + Top-p:

This strategy combines three methods to address the issues faced by Greedy and Beam Search.

Sampling involves choosing the next word randomly based on its conditional probability distribution.

In Top-k sampling, the model selects the k most likely words and redistributes the probability mass among them before choosing the next word.

Top-p sampling adds an additional constraint to Top-k by choosing words from the smallest set whose cumulative probability exceeds p.

While these methods produce more fluent and human-like text, they may still generate repetitive word sequences.

Recent research indicates that the flaws of Greedy and Beam Search, mainly generating repetitive word sequences, are caused by the model itself rather than the decoding method. Top-k and Top-p sampling also suffer from generating repetitive word sequences, but they often provide better results in open-ended language generation tasks compared to Greedy and Beam Search.

Prompt Engineering#

The Career of Future

With the No-Code revolution on the horizon and the advent of new-age technologies like GPT-3, we may see a significant difference between the careers of today and those of tomorrow…

When designing training prompts, the goal is to obtain a zero-shot response from the model. If that isn’t possible, consider providing a few examples rather than an entire corpus. The standard workflow for training prompt design should follow this order: Zero-Shot → Few-Shot → Corpus-based Priming.

Step 1: Clearly define the problem you want to solve and categorize it into one of the possible natural language tasks, such as classification, Q&A, text generation, creative writing, etc.

Step 2: Determine if it’s possible to obtain a solution using a zero-shot approach (i.e., without priming the GPT-3 model with any external training examples).

Step 3: If you believe that external examples are necessary to prime the model for your use case, revisit Step 2 and carefully reconsider the need for additional examples.

Step 4: Consider how the problem might be encountered in a textual format, given GPT-3’s “text-in, text-out” interface. Contemplate various ways to represent your problem using text.

Step 5: If you decide to use external examples, use as few as possible while ensuring diversity in your examples. This helps avoid overfitting the model or skewing its predictions.

By following these steps, you can effectively design prompts that address specific problems while minimizing the need for additional training examples, resulting in more accurate and efficient outcomes.